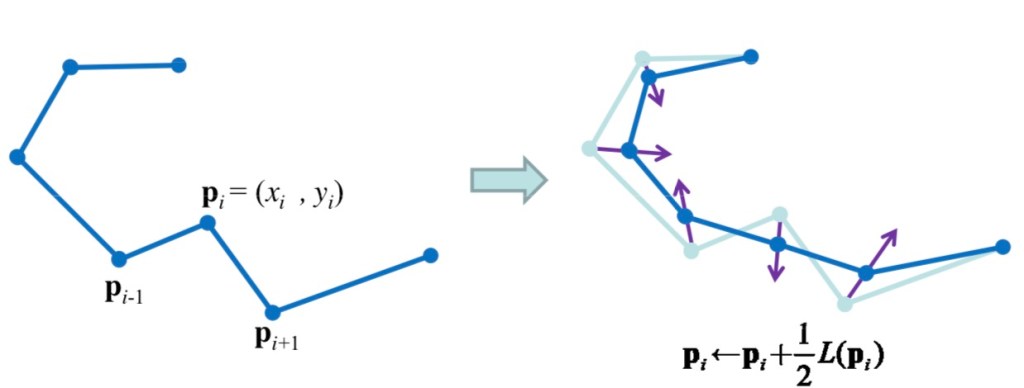

Laplacian smoothing moves each vertex of a mesh to the mean position of its adjacent neighbours. The filter computes this mean by summing the coordinates of the neighbouring vertices and dividing the result by the number of neighbours.
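Written as a formula, the averaging step looks like this. The notation is an assumption made here for illustration and is not taken from the original code: $\mathbf{p}_j$ is the position of vertex $j$, $N(i)$ is the set of vertices adjacent to vertex $i$, and $\mathbf{p}_i'$ is the smoothed position of vertex $i$.

$$
\mathbf{p}_i' = \frac{1}{|N(i)|} \sum_{j \in N(i)} \mathbf{p}_j
$$

For example, a vertex whose two neighbours sit at $(2, 0, 0)$ and $(0, 2, 0)$ ends up at $(1, 1, 0)$.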

...

    for (int vertexIndex = 0; vertexIndex < meshVertices.Length; vertexIndex++)
    {
        // Find the vertices adjacent to the current vertex
        adjacentVertices = MeshUtils.findAdjacentNeighbors(meshVertices, t, meshVertices[vertexIndex]);
        if (adjacentVertices.Count != 0)
        {
            dx = 0.0f;
            dy = 0.0f;
            dz = 0.0f;
            // Sum the neighbours' coordinates, then divide by the number of neighbours
            for (int j = 0; j < adjacentVertices.Count; j++)
            {
                dx += adjacentVertices[j].x;
                dy += adjacentVertices[j].y;
                dz += adjacentVertices[j].z;
            }
            // Store the mean position as the smoothed vertex
            wv[vertexIndex].x = dx / adjacentVertices.Count;
            wv[vertexIndex].y = dy / adjacentVertices.Count;
            wv[vertexIndex].z = dz / adjacentVertices.Count;
        }
    }
    return wv;

...

Code example from here: Mesh Smoother (Unity 3D)
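The excerpt leaves out how the neighbours are found, so here is a minimal, self-contained sketch of the whole step that can be run outside Unity. It rests on several assumptions: it uses `System.Numerics.Vector3` in place of Unity's `Vector3`, the names `LaplacianSketch`, `BuildAdjacency` and `Smooth` are hypothetical, and two vertices count as neighbours when their indices appear in the same triangle. That may well differ from what `MeshUtils.findAdjacentNeighbors` does, since that method is not shown. Leaving vertices without neighbours unchanged is also a choice made here.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

public static class LaplacianSketch
{
    // Hypothetical helper: for every vertex index, collect the indices of the
    // vertices that share a triangle with it. `triangles` holds three vertex
    // indices per triangle, as in a typical index buffer.
    public static List<HashSet<int>> BuildAdjacency(int vertexCount, int[] triangles)
    {
        var adjacency = new List<HashSet<int>>(vertexCount);
        for (int i = 0; i < vertexCount; i++)
            adjacency.Add(new HashSet<int>());

        for (int t = 0; t + 2 < triangles.Length; t += 3)
        {
            int a = triangles[t], b = triangles[t + 1], c = triangles[t + 2];
            adjacency[a].Add(b); adjacency[a].Add(c);
            adjacency[b].Add(a); adjacency[b].Add(c);
            adjacency[c].Add(a); adjacency[c].Add(b);
        }
        return adjacency;
    }

    // One smoothing pass: each vertex becomes the mean of its neighbours.
    // Results go into a new array so that every average reads only the
    // original, unsmoothed positions.
    public static Vector3[] Smooth(Vector3[] vertices, int[] triangles)
    {
        var adjacency = BuildAdjacency(vertices.Length, triangles);
        var smoothed = new Vector3[vertices.Length];

        for (int i = 0; i < vertices.Length; i++)
        {
            if (adjacency[i].Count == 0)
            {
                // Assumption: a vertex with no neighbours keeps its position.
                smoothed[i] = vertices[i];
                continue;
            }

            Vector3 sum = Vector3.Zero;
            foreach (int neighbour in adjacency[i])
                sum += vertices[neighbour];

            smoothed[i] = sum / adjacency[i].Count;
        }
        return smoothed;
    }
}
```

Calling `LaplacianSketch.Smooth(vertices, triangles)` several times in a row smooths the mesh further. Because each pass pulls vertices towards their neighbours, repeated passes also tend to shrink the mesh.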