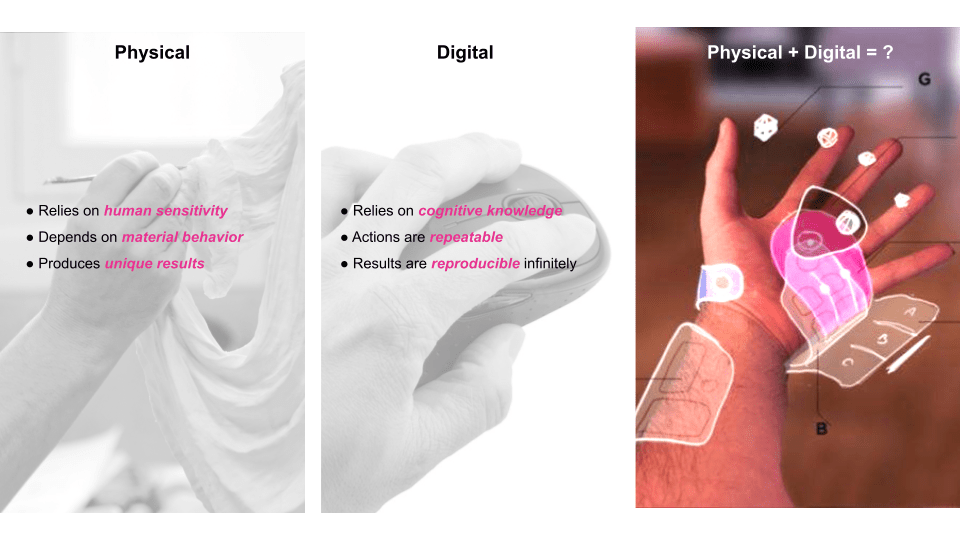

This project explores augmented reality technology to define a sculpting process that links physical craft with methodologies from 3D modelling software. The output is an augmented reality app, SculptAR, that applies concepts from multimodal interactivity studies to implement gesture and voice recognition. The aim is to integrate these inputs and define augmented sculpting as an engaging, interactive experience that merges physical and digital skills. The project also highlights emerging possibilities for developing interactive experiences that engage several human senses.

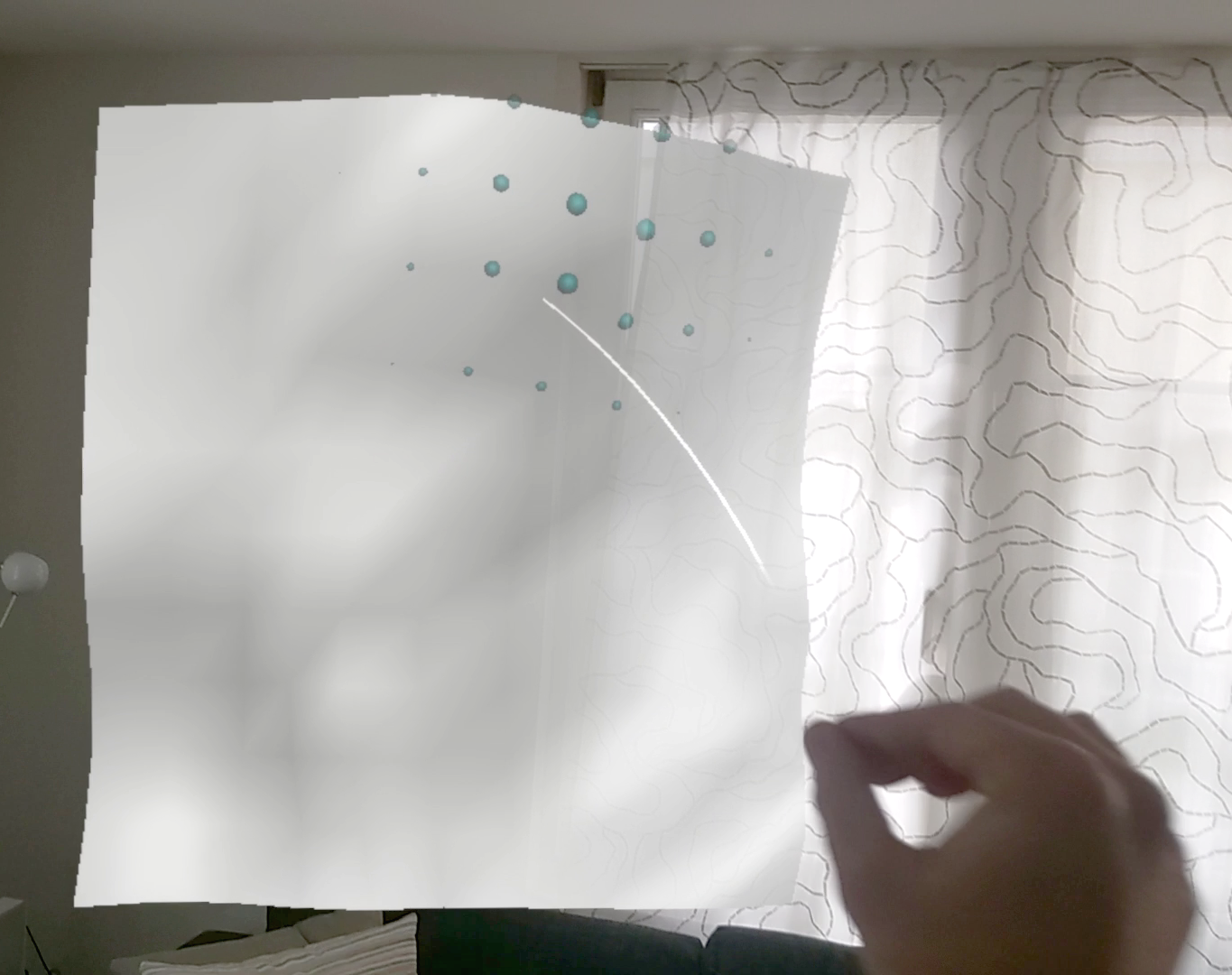

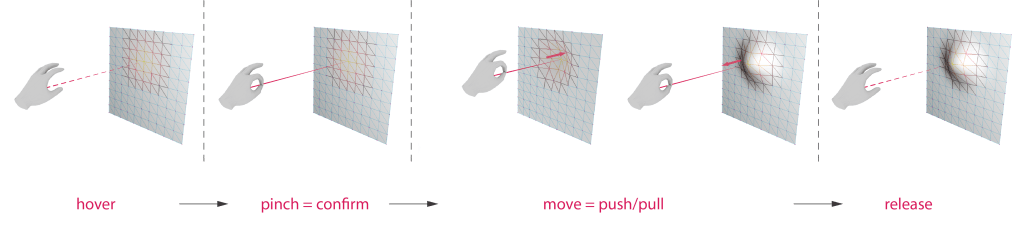

The sculpting interactions build on the headset’s ability to track the position of the hands continuously. The software determines the relationship (distance, rotation) between the physical hand and the digital object. In SculptAR, two invisible rays project outwards from the user’s palms. Whenever one of these rays hits the mesh being sculpted, a function calculates the distance between the hand and the object. This information is fundamental for sculpting, since the hand’s proximity to the mesh determines whether the user intends to pull or push the geometry.
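The palm-ray distance check can be illustrated with a small sketch. This is not SculptAR's code: it is a plain Python approximation that assumes the mesh is a list of triangles and uses the standard Möller–Trumbore ray-triangle test, which the project may or may not use. The names `ray_triangle_distance` and `hand_to_mesh` are hypothetical, and the sketch stops at the distance; how that distance maps to pulling or pushing is left out because the thresholds are not given here.

```python
import math
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalise(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def ray_triangle_distance(origin: Vec3, direction: Vec3, tri: Triangle,
                          eps: float = 1e-9) -> Optional[float]:
    """Distance along the ray to the triangle, or None if the ray misses."""
    d = normalise(direction)  # unit direction, so t is a true distance
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(d, e2)
    det = dot(e1, p)
    if abs(det) < eps:  # ray is parallel to the triangle plane
        return None
    inv = 1.0 / det
    t_vec = sub(origin, v0)
    u = dot(t_vec, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(t_vec, e1)
    v = dot(d, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None  # ignore hits behind the palm


def hand_to_mesh(palm: Vec3, palm_dir: Vec3,
                 faces: List[Triangle]) -> Optional[Tuple[int, float]]:
    """Return (face index, distance) for the nearest face hit by the palm ray."""
    best: Optional[Tuple[int, float]] = None
    for index, tri in enumerate(faces):
        t = ray_triangle_distance(palm, palm_dir, tri)
        if t is not None and (best is None or t < best[1]):
            best = (index, t)
    return best
```

In this sketch, `hand_to_mesh` returns `None` when the ray does not reach the mesh, which corresponds to the case where no sculpting target is recognised.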

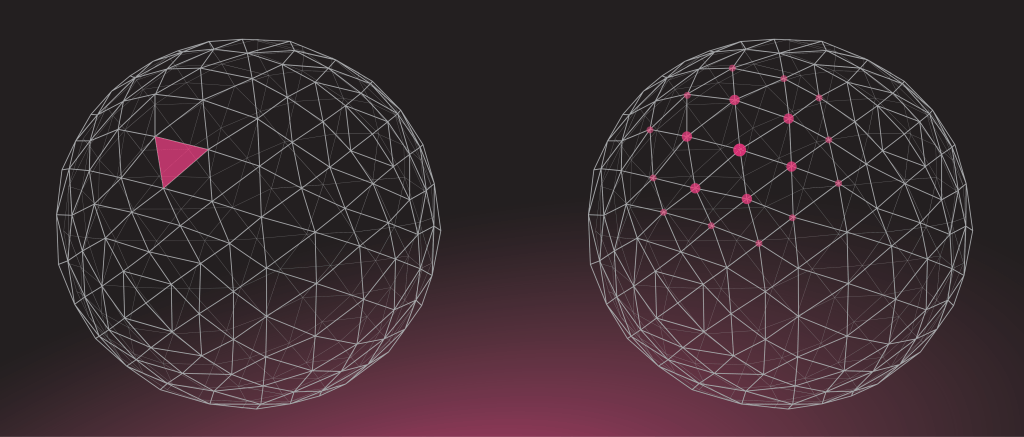

SculptAR provides two main sculpting modes that differ primarily in the effect they produce on the sculpted object. The first, Sculpt Faces, uses the ray from the user’s hand to determine the position of the selected mesh face. In this operation, the vertices adjacent to the highlighted face all receive the same displacement force, creating a more jagged effect that is ideal for blocking out the overall form. By contrast, the second option, Sculpt Vertices, provides a smoother, more uniform manipulation of the mesh. This approach iterates through the mesh vertices and determines their distance to the user’s hand position. Once the program registers the sculpting confirmation, the force applied to each vertex decreases with its distance from the manipulated area.
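The difference between the two modes can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the app's implementation: the function names, the `strength` and `radius` parameters, the caller-supplied displacement direction, and the linear falloff are all hypothetical choices. The falloff in particular is one plausible reading of how the force varies across vertices, with the strongest effect nearest the hand.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def displace(v: Vec3, direction: Vec3, amount: float) -> Vec3:
    """Move a vertex along `direction` by `amount`."""
    return (v[0] + direction[0] * amount,
            v[1] + direction[1] * amount,
            v[2] + direction[2] * amount)


def sculpt_faces(vertices: Sequence[Vec3], face: Sequence[int],
                 direction: Vec3, strength: float) -> List[Vec3]:
    """Sculpt Faces sketch: every vertex of the selected face gets the same
    displacement, so the edit has a hard boundary (the jagged look)."""
    out = list(vertices)
    for index in face:
        out[index] = displace(out[index], direction, strength)
    return out


def sculpt_vertices(vertices: Sequence[Vec3], hand: Vec3, direction: Vec3,
                    strength: float, radius: float) -> List[Vec3]:
    """Sculpt Vertices sketch: iterate over all vertices and weight the
    displacement by distance to the hand (assumed linear falloff)."""
    out = []
    for v in vertices:
        distance = math.dist(v, hand)
        weight = max(0.0, 1.0 - distance / radius)  # 1 at the hand, 0 at radius
        out.append(displace(v, direction, strength * weight))
    return out


# Hypothetical example data: four vertices, one triangular face (0, 1, 3).
mesh = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
up = (0.0, 0.0, 1.0)
faces_result = sculpt_faces(mesh, (0, 1, 3), up, 0.5)
vertices_result = sculpt_vertices(mesh, (0.0, 0.0, 0.0), up, 0.5, 2.0)
```

With the example data, `sculpt_faces` lifts the three face vertices by the full 0.5 and leaves the fourth untouched, while `sculpt_vertices` produces a graded result that tapers to zero at the edge of the radius.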